
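Contents

1. Quick overview: installing a Linux distribution under WSL2 (Bilibili video)

2. Common WSL2 commands

3. Accessing files between Windows and the Linux subsystem

4. Using the Windows network proxy from the Linux subsystem; network configuration (mirrored networking, not NAT)

5. Installing Miniconda on WSL2 Ubuntu

6. Installing Docker on WSL2

7. Installing CUDA on WSL2

8. A graphical desktop for the WSL2 subsystem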

Important: this tutorial targets only the latest version of Windows 11. Other Windows versions have not been tested.

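1. Quick overview: installing a Linux distribution under WSL2 (Bilibili video)

Video: Installing the Windows Subsystem for Linux on Windows, installing Docker, and enabling LAN access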

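2. Common WSL2 commands

Basic WSL commands

# Update WSL
wsl --update

# Check the WSL and kernel versions; the WSL version should be 2.x
wsl -v

WSL version: 2.2.4.0
Kernel version: 5.15.153.1-2

# If WSL is not 2.x, set WSL 2 as the default version
wsl --set-default-version 2

# List the installed distributions
wsl -l -v

# List the distributions available for installation
wsl --list --online

# Install the Ubuntu-22.04 distribution
wsl --install -d Ubuntu-22.04

# Start the distribution; this guide uses Ubuntu 22.04 for all commands and configuration
wsl -d Ubuntu-22.04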
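To check programmatically that every distribution runs under WSL 2, the table printed by wsl -l -v can be parsed. The following is a minimal Python sketch, not an official tool. The function name parse_wsl_list and the sample text are hypothetical, and the sample only imitates the NAME / STATE / VERSION layout that the command prints.

```python
from typing import Dict, List, Union

Record = Dict[str, Union[str, int, bool]]


def parse_wsl_list(text: str) -> List[Record]:
    """Turn the table printed by `wsl -l -v` into a list of records."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    records: List[Record] = []
    for line in lines[1:]:  # lines[0] is the NAME / STATE / VERSION header
        is_default = line.lstrip().startswith("*")  # "*" marks the default distro
        name, state, version = line.replace("*", " ", 1).split()
        records.append(
            {"name": name, "state": state, "version": int(version), "default": is_default}
        )
    return records


# Hypothetical sample in the layout `wsl -l -v` prints; the Debian row is made up.
SAMPLE = """\
  NAME            STATE           VERSION
* Ubuntu-22.04    Running         2
  Debian          Stopped         1
"""

for distro in parse_wsl_list(SAMPLE):
    hint = "ok" if distro["version"] == 2 else "convert with: wsl --set-version <name> 2"
    print(f'{distro["name"]}: WSL {distro["version"]} ({hint})')
```

If you capture the real output with subprocess, note that wsl.exe typically emits UTF-16LE text, so the bytes may need decoding with "utf-16-le" before they are passed to this function.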

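Set the default WSL version: wsl --set-default-version 2

List the installable distributions: wsl --list --online

Install Ubuntu: wsl --install -d Ubuntu-22.04

Shut down WSL: wsl --shutdown

The .wslconfig configuration file lives in the user profile directory, %UserProfile%:

C:\Users\<UserName>\.wslconfig

Show the subsystem's IP address: hostname -I

Forward a port (run from an elevated prompt; connectaddress is the subsystem IP reported by hostname -I): netsh interface portproxy add v4tov4 listenport=5244 listenaddress=0.0.0.0 connectport=5244 connectaddress=172.27.14.63

List the forwarded ports: netsh interface portproxy show all

Delete a forwarding rule: netsh interface portproxy delete v4tov4 listenport=5244 listenaddress=0.0.0.0

.wslconfig settings:

[wsl2]

networkingMode=mirrored

[experimental]

hostAddressLoopback=true

3. Accessing files between Windows and the Linux subsystem

File Permissions for WSL | Microsoft Learn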
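As a quick illustration of how the two file systems see each other: by default WSL mounts Windows drives under /mnt/<drive letter>, and Windows reaches Linux files through the \\wsl$\<distro> network path. The sketch below converts between the two forms. The helper names windows_to_wsl and wsl_to_unc are hypothetical, and the code assumes the default mount root and the distribution name Ubuntu-22.04 used in this guide.

```python
from pathlib import PurePosixPath, PureWindowsPath


def windows_to_wsl(path: str) -> str:
    """Map a Windows path such as C:\\Users\\me to /mnt/c/Users/me."""
    win = PureWindowsPath(path)
    drive = win.drive.rstrip(":").lower()
    if len(drive) != 1:
        raise ValueError(f"expected a path with a drive letter, got {path!r}")
    # parts[0] is the drive root ("C:\\"); the rest are directory names
    return str(PurePosixPath("/mnt", drive, *win.parts[1:]))


def wsl_to_unc(path: str, distro: str = "Ubuntu-22.04") -> str:
    """Map an absolute Linux path to the \\\\wsl$\\<distro>\\... form used on Windows."""
    posix = PurePosixPath(path)
    if not posix.is_absolute():
        raise ValueError(f"expected an absolute Linux path, got {path!r}")
    # parts[0] is "/", which has no counterpart in the UNC form
    return "\\\\wsl$\\" + "\\".join((distro, *posix.parts[1:]))


print(windows_to_wsl(r"C:\Users\me\project"))
print(wsl_to_unc("/home/me/.bashrc"))
```

Inside the subsystem, the built-in wslpath command performs the same kind of conversion and respects a non-default mount root, so prefer it over this sketch when it is available.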

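4. Using the Windows network proxy from the Linux subsystem; network configuration (mirrored networking, not NAT)

Accessing network applications with WSL

Steps:

The configuration below applies to Windows 11 only. It switches the Ubuntu subsystem to mirrored networking so that it can use the Windows network proxy directly.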

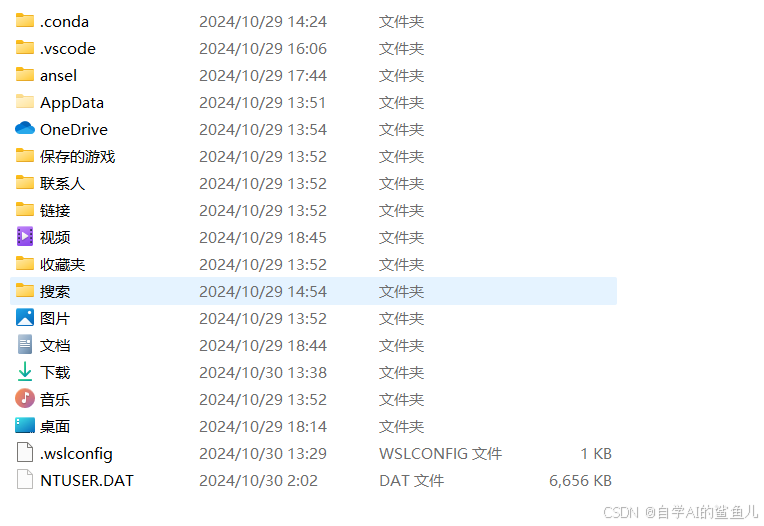

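1) Enter %UserProfile% in the address bar of Windows File Explorer to jump to your user directory.

2) Find the .wslconfig file there. If it does not exist, create it.

Write the following content into it: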

# Settings apply across all Linux distros running on WSL 2

[wsl2]

# Limits VM memory; the value can be set as whole numbers using GB or MB
# memory=8GB

# Sets the number of virtual processors the VM may use
processors=10

# Enable mirrored networking
networkingMode=mirrored

# Enable DNS tunneling
dnsTunneling=true

# Apply the Windows firewall to WSL traffic
firewall=true

# Synchronize the Windows proxy settings automatically
autoProxy=true

[experimental]

# Reclaim memory automatically; choose one of gradual, dropcache, disabled
autoMemoryReclaim=gradual

# Release unused WSL2 virtual disk space automatically
sparseVhd=true

3) In PowerShell, run wsl --shutdown to stop all subsystems. Closing the terminal window is not enough (important).

4) Start the subsystem again so that the .wslconfig settings take effect:

wsl -d Ubuntu-22.04
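If you prefer to generate the file rather than edit it by hand, the settings can be rendered with Python's configparser. This is only a sketch: render_wslconfig and SETTINGS are hypothetical names, and the placement of each key under [wsl2] or [experimental] follows my reading of Microsoft's .wslconfig documentation, so check it against your WSL version.

```python
import configparser
import io
from typing import Dict


def render_wslconfig(settings: Dict[str, Dict[str, str]]) -> str:
    """Render nested {section: {key: value}} settings as .wslconfig text."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep camelCase keys such as networkingMode
    parser.read_dict(settings)
    buffer = io.StringIO()
    parser.write(buffer, space_around_delimiters=False)  # emit key=value
    return buffer.getvalue()


# Values taken from this guide; the section placement is an assumption (see above).
SETTINGS = {
    "wsl2": {
        "processors": "10",
        "networkingMode": "mirrored",
        "dnsTunneling": "true",
        "firewall": "true",
        "autoProxy": "true",
    },
    "experimental": {
        "autoMemoryReclaim": "gradual",
        "sparseVhd": "true",
    },
}

text = render_wslconfig(SETTINGS)
print(text)
# To install it on Windows, write `text` to Path.home() / ".wslconfig",
# then run `wsl --shutdown` so the next start picks it up.
```

Setting optionxform to str matters because configparser lowercases keys by default, which would turn networkingMode into networkingmode.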

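5) Verify that the subsystem is using the Windows proxy

The Windows proxy client should run in TUN mode. If TUN mode is off, you may need to set the proxy environment variables with export in the Ubuntu terminal. This lasts only for the current session; to make it permanent, add the lines to a shell configuration file such as ~/.bashrc. Port 7890 is the proxy port used here; replace it with your own. The commands are:

# Mirrored networking

export https_proxy=http://127.0.0.1:7890

export http_proxy=http://127.0.0.1:7890

# NAT networking: add the following to the shell configuration file

# wsl http proxy

# Obtain the host IP address dynamically

HOST_IP=$(ip route show | grep -i default | awk '{ print $3}')

export https_proxy=http://$HOST_IP:7890

export http_proxy=http://$HOST_IP:7890

# With non-mirrored networking, LAN access may additionally require a port mapping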
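For the NAT case, the grep and awk pipeline simply picks the gateway address out of the default route, which in NAT mode is the Windows host. A rough Python equivalent is sketched below. default_gateway and proxy_env are hypothetical helpers, the sample route line and its address are made up, and port 7890 is the proxy port assumed throughout this guide.

```python
from typing import Dict, Optional


def default_gateway(ip_route_output: str) -> Optional[str]:
    """Return the address after 'default via' in `ip route show` output."""
    for line in ip_route_output.splitlines():
        fields = line.split()
        if fields and fields[0] == "default" and "via" in fields:
            return fields[fields.index("via") + 1]
    return None


def proxy_env(host_ip: str, port: int = 7890) -> Dict[str, str]:
    """Build the proxy variables that the export lines above would set."""
    url = f"http://{host_ip}:{port}"
    return {"http_proxy": url, "https_proxy": url}


# Hypothetical `ip route show` output from a NAT-mode subsystem.
SAMPLE = """\
default via 172.27.0.1 dev eth0 proto kernel
172.27.0.0/20 dev eth0 proto kernel scope link src 172.27.14.63
"""

gateway = default_gateway(SAMPLE)
if gateway is not None:
    for name, value in proxy_env(gateway).items():
        print(f"export {name}={value}")
```

Passing the resulting dictionary as env to subprocess.run, merged with os.environ, applies the proxy to a single command without touching the shell configuration.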

# Verify with curl rather than ping: ping uses ICMP and does not go through an HTTP proxy

curl -I https://www.baidu.com

# Result:

HTTP/1.1 200 Connection established

HTTP/1.1 200 OK

Accept-Ranges: bytes

Cache-Control: private, no-cache, no-store, proxy-revalidate, no-transform

Connection: keep-alive

Content-Length: 277

Content-Type: text/html

Date: Wed, 30 Oct 2024 05:55:33 GMT

Etag: "575e1f71-115"

Last-Modified: Mon, 13 Jun 2016 02:50:25 GMT

Pragma: no-cache

Server: bfe/1.0.8.18
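The result contains two status lines: the first, "200 Connection established", is the proxy's reply to the tunnel request, and the second is the site's own response. A small sketch of how a script could tell the two apart follows. summarize_curl_head is a hypothetical helper, and treating the phrase "Connection established" as the sign of a proxy is a heuristic, since proxies are free to word that reply differently.

```python
from typing import Dict, Optional, Union


def summarize_curl_head(output: str) -> Dict[str, Union[bool, Optional[int]]]:
    """Report whether a proxy tunnel was opened and the final HTTP status."""
    status_lines = [ln.strip() for ln in output.splitlines() if ln.startswith("HTTP/")]
    via_proxy = any("connection established" in ln.lower() for ln in status_lines)
    final_status = int(status_lines[-1].split()[1]) if status_lines else None
    return {"via_proxy": via_proxy, "final_status": final_status}


# Shortened copy of the curl -I output shown above.
SAMPLE = """\
HTTP/1.1 200 Connection established

HTTP/1.1 200 OK
Accept-Ranges: bytes
Content-Type: text/html
"""

print(summarize_curl_head(SAMPLE))
# → {'via_proxy': True, 'final_status': 200}
```

An empty output, which is what a hanging curl produces once it is interrupted, yields via_proxy False and final_status None.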

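# Again, verify with curl rather than ping, because ping does not go through an HTTP proxy

curl -I https://www.google.com

# Output like the following means success. If the command hangs with no output, the proxy is not working:

HTTP/1.1 200 Connection established

HTTP/2 200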

content-type: text/html; charset=ISO-8859-1

content-security-policy-report-only: object-src 'none';base-uri 'self';script-src 'nonce-V1HCEWjM22NLtR0v_UYszA' 'strict-dynamic' 'report-sample' 'unsafe-eval' 'unsafe-inline' https: http:;report-uri https://csp.withgoogle.com/csp/gws/other-hp

accept-ch: Sec-CH-Prefers-Color-Scheme

p3p: CP="This is not a P3P policy! See g.co/p3phelp for more info."

date: Wed, 30 Oct 2024 05:55:47 GMT

server: gws

x-xss-protection: 0

x-frame-options: SAMEORIGIN

expires: Wed, 30 Oct 2024 05:55:47 GMT

cache-control: private

set-cookie: AEC=AVYB7co_o4i9i6JponI0fUy0GPIrYVSoGc5rdk2IyBuVdZQF5ljfIn8euNo; expires=Mon, 28-Apr-2025 05:55:47 GMT; path=/; domain=.google.com; Secure; HttpOnly; SameSite=lax

set-cookie: NID=518=dQebAMHHJPU9hSZIshAFzUHWMPFEZObPIMl6ZMjmay5duwQLkum7Hd44ikEgyVDZVMRlNWAWsOs5sNB2eSsrGTzms7iByOR11e3ZfvyODGfhEId-r6KzJ80y5Fhxvt7C-ecSOoCrBIUgsREJ6ZNFauGHkwwgob-61jKhK81iz5Y7UXQYGpH5Jamb74rLKrpw; expires=Thu, 01-May-2025 05:55:47 GMT; path=/; domain=.google.com; HttpOnly

alt-svc: h3=":443"; ma=2592000,h3-29=":443"; ma=2592000

5. Installing Miniconda on WSL2 Ubuntu

Install:

mkdir -p ~/miniconda3

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh -O ~/miniconda3/miniconda.sh

bash ~/miniconda3/miniconda.sh -b -u -p ~/miniconda3

rm ~/miniconda3/miniconda.sh

Activate after installation:

source ~/miniconda3/bin/activate

Initialize the shell environment:

conda init --all
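After opening a new terminal, one way to confirm that activation worked is to look at the environment variables conda sets, CONDA_PREFIX and CONDA_DEFAULT_ENV. The helper conda_status below is a hypothetical name, and the sketch only reads variables; it does not call conda itself.

```python
import os
from typing import Mapping


def conda_status(environ: Mapping[str, str]) -> str:
    """Describe the active conda environment based on conda's own variables."""
    prefix = environ.get("CONDA_PREFIX")
    if not prefix:
        return "no conda environment is active"
    name = environ.get("CONDA_DEFAULT_ENV", "unknown")
    return f"active conda environment: {name} ({prefix})"


print(conda_status(os.environ))
```

With the installation path used above, an activated base environment should report a prefix ending in miniconda3.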

6. Installing Docker on WSL2

Get started with Docker containers on WSL | Microsoft Learn

Installing Docker on Windows/WSL (official documentation)

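7. Installing CUDA on WSL2

CUDA on WSL User Guide (official NVIDIA documentation)

After installing a Linux distribution with WSL2 on Windows 11, nvidia-smi already prints GPU information. Even so, the CUDA Toolkit may not be installed or correctly configured. Typical symptoms: the nvcc -V command is unavailable, and installing flash-attn, which has to be compiled with CUDA (pip install flash-attn==2.6.3 --no-build-isolation), fails.

Points to note:

- The NVIDIA graphics driver only needs to be installed on the Windows machine. Do not install any graphics driver inside the WSL subsystem; install only the CUDA Toolkit. From the NVIDIA guide: "From this point you should be able to run any existing Linux application which requires CUDA. Do not install any driver within the WSL environment. For building a CUDA application, you will need CUDA Toolkit. Read the next section for further information."

- The latest NVIDIA Windows GPU driver fully supports WSL 2. With CUDA support in the driver, existing applications (compiled on another Linux system for the same target GPU) run unmodified in the WSL environment. Compiling new CUDA applications requires the CUDA Toolkit for Linux x86. CUDA Toolkit support for WSL is still in preview, because development tools such as profilers are not yet available. CUDA application development itself is fully supported in WSL2, so users should be able to compile new CUDA Linux applications with the latest CUDA Toolkit for x86 Linux.

- Once the Windows NVIDIA GPU driver is installed on the system, CUDA becomes available in WSL 2. The CUDA driver installed on the Windows host is stubbed inside WSL 2 as libcuda.so, so users must not install any NVIDIA GPU Linux driver within WSL 2. Care is needed here: the default CUDA Toolkit package bundles a driver, and a default installation can easily overwrite the WSL 2 NVIDIA driver. The guide recommends that developers use the separate WSL 2 (Ubuntu) CUDA Toolkit from the CUDA Toolkit download page to avoid this. The WSL-Ubuntu CUDA Toolkit installer does not overwrite the NVIDIA driver that is already mapped into the WSL 2 environment.

The steps to install the CUDA Toolkit in WSL are as follows (download page selection: Linux, x86_64, WSL-Ubuntu, 2.0):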

wget https://developer.download.nvidia.com/compute/cuda/repos/wsl-ubuntu/x86_64/cuda-wsl-ubuntu.pin

sudo mv cuda-wsl-ubuntu.pin /etc/apt/preferences.d/cuda-repository-pin-600

wget https://developer.download.nvidia.com/compute/cuda/12.6.2/local_installers/cuda-repo-wsl-ubuntu-12-6-local_12.6.2-1_amd64.deb

sudo dpkg -i cuda-repo-wsl-ubuntu-12-6-local_12.6.2-1_amd64.deb

sudo cp /var/cuda-repo-wsl-ubuntu-12-6-local/cuda-*-keyring.gpg /usr/share/keyrings/

sudo apt-get update

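sudo apt-get -y install cuda-toolkit-12-6

As a rule of thumb, if the GPU build of torch, deepspeed, and flash-attn all install cleanly, CUDA is essentially set up. The last two are the real test: flash-attn in particular is compiled with CUDA, so its installation will most likely fail if the toolkit is missing or misconfigured.

Test code: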

import torch

import flash_attn

import deepspeed

import xformers

print(deepspeed.__version__)

print(xformers.__version__)

print(flash_attn.__version__)

print(torch.__version__)

print(torch.cuda.is_available())

print(torch.cuda.device_count())

print(torch.cuda.get_device_name(0))

print(torch.cuda.current_device())

# Output

0.14.0

0.0.27

2.6.3

2.3.1+cu121

True

1

NVIDIA GeForce RTX 3090

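0

Some issues:

The Python script above shows that CUDA is working, yet the nvcc -V command still fails. The reason is that the CUDA installation directory usually has to be added to the system PATH and LD_LIBRARY_PATH. Until those environment variables are set correctly, the shell cannot find the nvcc command.

1) Verify that the CUDA Toolkit is installed correctly by checking its installation directory. By default CUDA is installed in /usr/local/cuda-12.6 (the path varies with the version you installed). Check whether nvcc exists:

ls /usr/local/cuda-12.6/bin/nvcc

In my case the file exists, which shows that CUDA is installed and only the environment variables are missing.

2) Configure the environment variables

Edit your shell configuration file (such as ~/.bashrc or ~/.zshrc) and add the following:

export PATH=/usr/local/cuda-12.6/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda-12.6/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

3) Save the file, then reload the configuration

source ~/.bashrc

4) Test whether the configuration works

which nvcc

# This should print a path like /usr/local/cuda-12.6/bin/nvcc. If nothing is printed, PATH is not configured correctly.

nvcc -V

# Output: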

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2024 NVIDIA Corporation

Built on Thu_Sep_12_02:18:05_PDT_2024

Cuda compilation tools, release 12.6, V12.6.77

Build cuda_12.6.r12.6/compiler.34841621_0

At this point CUDA is fully installed.
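A note on the ${PATH:+:${PATH}} idiom in the export lines: it appends ":" plus the old value only when the variable is already set and non-empty, which avoids a trailing colon. An empty entry in PATH or LD_LIBRARY_PATH is interpreted as the current directory, so the guard is worth keeping. The Python sketch below mirrors that logic; prepend_path is a hypothetical helper, and the CUDA path is the one assumed in this guide.

```python
import os
import shutil
from typing import Optional


def prepend_path(new_dir: str, current: Optional[str]) -> str:
    """Mirror NEW${VAR:+:${VAR}}: add ':' + current only if current is non-empty."""
    return f"{new_dir}:{current}" if current else new_dir


CUDA_BIN = "/usr/local/cuda-12.6/bin"  # adjust to the version you installed

new_path = prepend_path(CUDA_BIN, os.environ.get("PATH"))
print(new_path.split(":")[0])

# shutil.which accepts an explicit search path, so this checks whether nvcc
# would be found once the export line is in effect (prints None if not).
print(shutil.which("nvcc", path=new_path))
```

The same helper applies unchanged to LD_LIBRARY_PATH with /usr/local/cuda-12.6/lib64.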

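8. A graphical desktop for the WSL2 subsystem

1) Before installing,

2) Steps inside the WSL subsystem

# Install xfce4

sudo apt install xfce4 # if this fails, reinstall with: sudo apt reinstall xfce4

# Append the following line to the end of ~/.bashrc and save. Replace 172.30.16.1 with your own IPv4 address, which you can find by running ipconfig in a Windows terminal.

sudo vim ~/.bashrc

# Line to add

export DISPLAY=172.30.16.1:0

# Reload the configuration file to apply it

source ~/.bashrc

# Restart the WSL subsystem: run the following commands in a Windows terminal, then open the subsystem again

wsl --shutdown # stop WSL

wsl -d Ubuntu-22.04 # start it again

# Start an Xfce session with sudo:

sudo startxfce4

3) Install VcXsrv X Server and start it. Download: VcXsrv - Open-Source X Server for Windows

After the one-click installation, it needs to be configured at first launch: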

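References:

CUDA on WSL User Guide

Installing WSL2 on Windows 11 and configuring an Ubuntu environment

Accessing network applications with WSL

Using the host machine's network proxy from WSL2

WSL2 mirrored networking mode: a smoother Linux development experience

Tutorial: setting up mirrored networking between Windows and WSL with the new WSL2 2.0

Installing and configuring WSL2 (Ubuntu 20.04) on Windows 10: a very detailed tutorial

The WSL default installation directory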